AI Implementation Strategy: A Step-by-Step Guide for Business Leaders

In the UK, artificial intelligence has moved beyond early experimentation: automation and decision intelligence are becoming part of day-to-day work. Yet many businesses still struggle to translate interest into execution.

AI budgets are poised to grow by nearly 40% over the next two years. Yet even with this momentum, only 25% of companies report measurable ROI.

This paradox defines today’s AI implementation: high investment, low realisation.

Your board wants an AI roadmap, but many leaders remain unsure how to implement AI effectively in their business.

Use this guide to implement an AI strategy framework with confidence.

Why Most AI Strategies Stall

Every business wants to be an early adopter of AI, but Gartner estimates that, by 2026, around 60% of AI projects will be abandoned because the supporting data infrastructure is not ready.

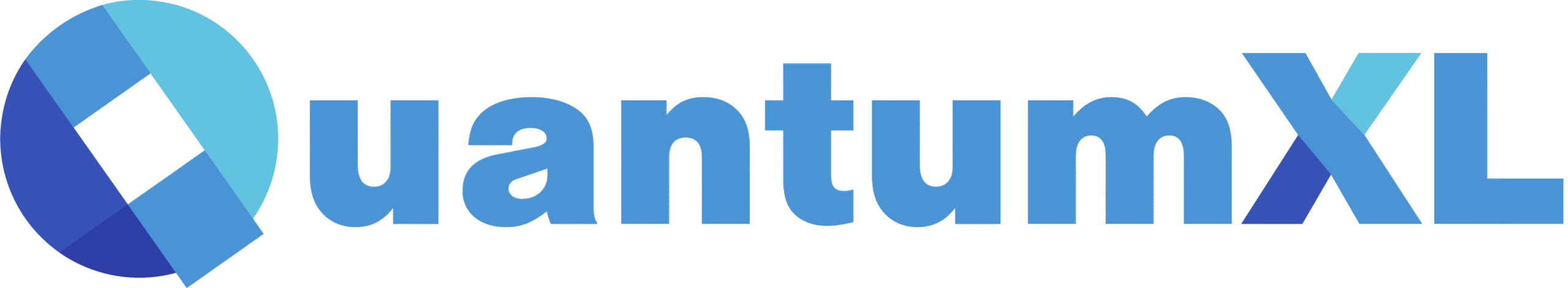

Talent is another barrier: Salesforce research finds that six in ten IT professionals cite a shortage of AI skills.

Across sectors, these issues follow a recurring execution pattern:

- Pilots run in isolation rather than within live workflows that can convert predictions into outcomes.

- Leaders treat AI as a project rather than a capability, which limits investment in skills, infrastructure, and iteration.

- Accountability remains loose, with no agreed metrics or ownership, which erodes organisational confidence.

The difference between stalled and successful programmes comes down to execution discipline. That is what we explore next: bringing structure to an AI strategy.

A 7-Step AI Implementation Strategy to Drive Measurable Business Value

Every practical AI strategy framework starts with clarity: what to solve, how to measure progress, and where AI creates measurable business outcomes.

These stages form a roadmap for leaders seeking to implement AI in business with purpose.

PHASE I: Foundations of an AI Implementation Strategy

Infrastructure enables AI success, but success begins with strategic intent: understanding why adoption matters and where it delivers measurable outcomes.

Tesco used AI-led demand forecasting to cut waste and improve availability, and the gains tied directly to margin improvement and stock accuracy.

Here’s what it takes.

Step 1: Define Business Problems, Objectives, and Outcomes

Leaders should define success before introducing models or tools. You don't want your AI pilots to join the 88% that fail to reach production because of unclear objectives.

Link every AI goal to business impact through three practical actions.

a) Move beyond “improve efficiency” to measurable outcomes

Measure success the same way leadership judges performance: revenue per customer, margin contribution, cost-to-serve, or forecast accuracy.

The intent is to convert efficiency into a visible business impact rather than an abstract improvement.

Frame each AI initiative as a hypothesis linked to clear business drivers:

“If AI-driven forecasting improves accuracy by 15%, what is the expected margin impact?”
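To make a hypothesis like this concrete, the arithmetic can be sketched in a few lines of Python. This is a minimal, hypothetical model rather than a standard method: it assumes the annual cost of forecast error (waste, markdowns, lost sales) scales linearly with forecast error, and reads "improves accuracy by 15%" as a 15% relative reduction in error. The function name `margin_impact` and every figure are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class MarginImpact:
    gross_saving: float   # £ per year, before running costs
    net_saving: float     # £ per year, after running costs
    margin_points: float  # change in margin, percentage points of revenue


def margin_impact(annual_error_cost: float, error_reduction: float,
                  annual_run_cost: float, annual_revenue: float) -> MarginImpact:
    """Hypothetical model: the cost of forecast error falls in proportion to the error reduction."""
    if not 0.0 <= error_reduction <= 1.0:
        raise ValueError("error_reduction must be a fraction between 0 and 1")
    gross = annual_error_cost * error_reduction
    net = gross - annual_run_cost
    return MarginImpact(gross, net, net / annual_revenue * 100)


# Illustrative figures only: £2m annual cost of forecast error, 15% less error,
# £120k a year to run the system, £50m annual revenue.
result = margin_impact(2_000_000, 0.15, 120_000, 50_000_000)
print(f"Net saving: £{result.net_saving:,.0f}; margin uplift: {result.margin_points:.2f} pts")
# prints: Net saving: £180,000; margin uplift: 0.36 pts
```

Subtracting running costs up front keeps the hypothesis honest: a forecasting gain that looks large in isolation can shrink once compute and maintenance are priced in.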

b) Anchor AI goals in real business pain points and KPIs

Next, identify where your business struggles: inefficiencies slowing growth, high churn, delayed deal closures, or other pain points.

Make sure data from real-world operations, such as sales systems, customer feedback, or production metrics, provides evidence for these pain points.

c) Tie AI goals to financial KPIs and business drivers

Then connect every AI use case to outcomes leadership already measures. If automation frees up time or reduces operating costs, or if AI improves forecasting, show how that strengthens cash flow or optimises resource planning.

This three-part perspective can help connect AI outcomes with business value:

- Revenue driver: identify high-value accounts and recommend relevant upsell or cross-sell opportunities.

- Cost driver: automate customer support and billing workflows to reduce manual effort and improve response efficiency.

- Profit integration: optimise pricing, forecasting, and resource allocation to strengthen profitability.

Step 2: Conduct AI Readiness Assessment

The OneAdvanced UK Trends Report shows that 36% of organisations attempting AI integration have already failed.

One example is the NHS, where AI adoption has been held back by “a lack of appropriate digital and data infrastructure… barrier to scaling AI solutions”.

a) Evaluate data systems, quality, and governance

Audit your data pipelines and assign explicit ownership for maintaining information. Clear accountability helps teams spot incomplete or disconnected datasets early, which keeps AI outputs reliable and reduces downstream friction.

Key actions include:

- Recording all data sources and classifying them by accessibility, accuracy, update frequency, and similar attributes

- Checking data completeness and consistency to spot gaps early

- Reviewing governance policies so that GDPR compliance, data-sharing agreements, and similar safeguards are in place
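A first pass at the completeness and consistency checks can be automated. The sketch below is a minimal, standard-library-only illustration: it assumes each source can be exported as a list of records (dicts), and the field names, sample records, allowed region codes, and 95% completeness threshold are all hypothetical.

```python
from collections.abc import Iterable


def completeness(records: list[dict], fields: Iterable[str]) -> dict[str, float]:
    """Share of records in which each field is present and non-empty."""
    total = len(records)
    report = {}
    for field in fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report


def inconsistent_values(records: list[dict], field: str, allowed: set[str]) -> list[str]:
    """Non-empty values of `field` that fall outside an agreed list (e.g. region codes)."""
    found = {r[field] for r in records if r.get(field) not in (None, "")}
    return sorted(found - allowed)


# Hypothetical CRM extract
crm = [
    {"customer_id": "C1", "region": "UK", "email": "a@example.com"},
    {"customer_id": "C2", "region": "uk", "email": ""},
    {"customer_id": "C3", "region": None, "email": "c@example.com"},
    {"customer_id": "C4", "region": "IE", "email": "d@example.com"},
]

THRESHOLD = 0.95  # hypothetical minimum completeness per field
for field, share in completeness(crm, ["customer_id", "region", "email"]).items():
    print(f"{field}: {share:.0%} {'OK' if share >= THRESHOLD else 'GAP'}")
print("Inconsistent region codes:", inconsistent_values(crm, "region", {"UK", "IE"}))
```

Running checks like these per source, and recording the results alongside each source's owner, gives the audit a repeatable baseline rather than a one-off snapshot.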

b) Assess infrastructure and organisational capability

Check the readiness of the people and systems that will carry the workload: can your current infrastructure and operating model sustain continuous AI delivery at scale?

To find out:

- Review compute and storage capacity, as well as training and inference environments

- Assess workflow readiness so that teams can deploy, monitor, and update AI models

- Review teams to see where skills are missing (data, change management, etc.)

c) Use readiness templates and maturity scoring

Test whether your organisation is AI-ready against measurable benchmarks, using scoring templates that rate each key area: data quality, infrastructure, talent, and more.

An AI readiness assessment such as Cisco's can help make implementation measurable and repeatable.

Core activities involve:

- Creating a maturity matrix with ratings (1–5) across core dimensions: data, infrastructure, talent, and governance.

- Using gap-analysis templates to convert assessment findings into clear priorities. For instance, if data availability scored a 2, outline an action such as “integrate siloed sources through a unified data pipeline by Q3.”
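The maturity matrix and gap analysis can be captured in a small data structure. This is a sketch under stated assumptions: the four dimensions come from the text, but the scores, the target level of 3, and the actions attached to each gap are hypothetical placeholders that a real assessment would supply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rating:
    dimension: str
    score: int        # maturity rating: 1 (ad hoc) to 5 (optimised)
    action: str = ""  # remediation recorded during gap analysis

    def __post_init__(self) -> None:
        if not 1 <= self.score <= 5:
            raise ValueError(f"{self.dimension}: score must be between 1 and 5")


def gap_priorities(ratings: list[Rating], target: int = 3) -> list[tuple[str, int, str]]:
    """Dimensions scoring below target, largest gap first, paired with their actions."""
    gaps = [(r.dimension, target - r.score, r.action) for r in ratings if r.score < target]
    return sorted(gaps, key=lambda g: g[1], reverse=True)


# Hypothetical assessment results
matrix = [
    Rating("data", 2, "Integrate siloed sources through a unified data pipeline by Q3"),
    Rating("infrastructure", 3),
    Rating("talent", 1, "Launch role-specific data literacy training"),
    Rating("governance", 4),
]

for dimension, gap, action in gap_priorities(matrix):
    print(f"{dimension} (gap {gap}): {action}")
```

Ranking by gap size is only one prioritisation choice; weighting each dimension by its business impact would be a natural refinement.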

Step 3: Build a Cross-Functional AI Task Force

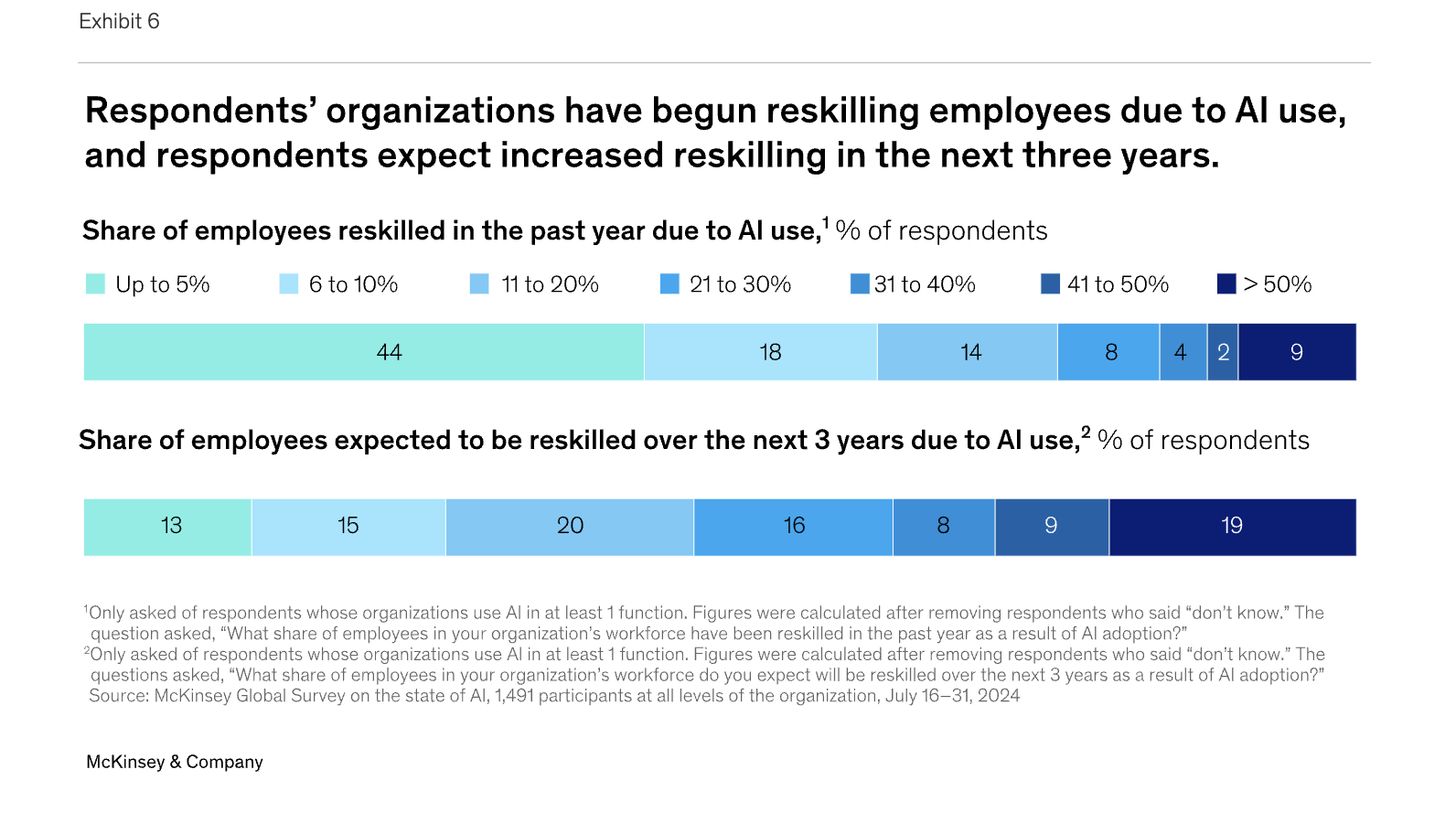

People remain at the heart of implementation, so make sure your team is AI-ready. McKinsey’s State of AI 2025 survey found that over 40% of organisations have already begun reskilling employees because of AI adoption.

a) Skills gap analysis and talent strategy

A major challenge is aligning technical, analytical, and leadership skills within one coherent talent plan. As a starting point, consider how NatWest’s AI Academy trained thousands of employees in data literacy and responsible AI.

Leaders will have to:

- Identify the roles most likely to evolve through automation or augmentation

- Use capability matrices to spot gaps in data literacy, model governance, and AI-informed decision-making

- Prioritise reskilling by each role's influence on business outcomes, not just its task list

b) Combine business leaders, data scientists, and process owners

AI delivers the best results when technical and business perspectives work in sync. Cross-functional teams achieve this by uniting data experts, process owners, and business leads in one execution unit.

Their shared goal is to turn technical capability into practical outcomes, such as models built to solve defined business problems.

In practice:

- Assign clear ownership to each AI initiative and share KPIs across functions

- Hold joint design sessions where business units define problems and data scientists shape models collaboratively

c) Implement training frameworks for AI adoption

Employees need continuous exposure to AI use-cases within their domain.

IBM reports that in the UK, only 45% of enterprises currently provide company-wide or role-specific AI training, and 67% of leaders cite internal resistance and cultural barriers as key blockers to adoption.

Against this backdrop, Skills England is launching a nationwide initiative to train 7.5 million workers, with the aim of unlocking up to £400bn in growth potential by 2030.

Leaders looking to convert reskilling into readiness can:

- Run internal learning labs where teams experiment safely with new tools

- Combine vendor training with in-house contextual learning so relevance stays high

- Use performance dashboards to measure the impact of reskilling on adoption and productivity

PHASE II: How to Implement AI in Business

Execution takes centre stage as the focus shifts from proving AI’s potential to realising its impact at scale.

This phase has two essential steps, and success depends on how effectively organisations replicate what works and embed it into daily operations.

Step 4: Scaling Successful Pilots

AI pilots test where the technology fits and how it performs under real conditions. Ocado, for example, applies AI across routing, robotics, and fulfilment, showing that integrating AI into existing systems, rather than running standalone pilots, creates compounding value.

There are three major parts of the pilot rollout.

a) Infrastructure requirements for enterprise deployment

Scaling AI depends on how well prepared your data environment is and whether it is supported by the right compute foundation. For instance, a recommendation model that performs well in a small marketing test will need stronger data connections and clearer access controls once it moves into production.

Leaders will:

- Review computing resources to make sure there’s enough processing capacity and storage

- Build stable data pipelines that move information reliably between departments

- Connect AI outputs directly into existing systems (e.g., CRM, finance dashboards) for continuous use

b) Performance monitoring and continuous improvement loops

Once deployed, AI systems need steady monitoring through clear checkpoints. Review how often predictions align with real outcomes and how reliably the system performs in live environments.

If accuracy drops, the model may no longer reflect current data patterns; if responses slow down, the serving infrastructure may need attention.

Review loops managed by data and business teams can:

- Compare predictions to recent business results on a set schedule

- Flag performance drops early through automated reports

- Refresh models with updated data once key metrics fall below target
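A scheduled review like this reduces to comparing recent predictions with what actually happened and raising a flag when a metric misses its target. The sketch assumes the metric is mean absolute percentage error (MAPE) on a demand forecast; the 10% target, the function names, and the sample data are hypothetical, and in practice the check would usually run inside a monitoring tool rather than as a stand-alone script.

```python
def mape(actuals: list[float], predictions: list[float]) -> float:
    """Mean absolute percentage error, skipping periods where the actual value is zero."""
    if len(actuals) != len(predictions):
        raise ValueError("actuals and predictions must be the same length")
    pairs = [(a, p) for a, p in zip(actuals, predictions) if a != 0]
    if not pairs:
        raise ValueError("no non-zero actuals to compare against")
    return sum(abs(a - p) / abs(a) for a, p in pairs) / len(pairs)


def scheduled_review(actuals: list[float], predictions: list[float],
                     target_mape: float = 0.10) -> dict:
    """One review cycle: measure error and flag the model for refresh if it misses target."""
    error = mape(actuals, predictions)
    return {"mape": error, "needs_retraining": error > target_mape}


# Hypothetical weekly demand: what actually sold vs what the model forecast
week_1 = scheduled_review([100, 120, 80, 110], [95, 130, 70, 112])
week_2 = scheduled_review([100, 90, 150], [120, 70, 120])
for name, result in (("week 1", week_1), ("week 2", week_2)):
    status = "refresh model" if result["needs_retraining"] else "within target"
    print(f"{name}: MAPE {result['mape']:.1%} -> {status}")
```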

Tools like Evidently AI, Neptune.ai, or MLflow can automate model tracking, monitor data drift, and visualise performance over time.
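To illustrate what drift monitoring computes under the hood, here is a hand-rolled Population Stability Index (PSI), one common measure of data drift. It is not the API of Evidently AI, Neptune.ai, or MLflow. The bin edges, sample data, and the 0.1 / 0.25 bands (a widely quoted rule of thumb, not a standard) are assumptions for illustration.

```python
import math
from bisect import bisect_right


def bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin; `edges` are sorted interior bin boundaries."""
    if not values:
        raise ValueError("cannot bin an empty sample")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(reference: list[float], current: list[float],
        edges: list[float], eps: float = 1e-4) -> float:
    """Population Stability Index between a reference (training) and a current sample."""
    total = 0.0
    for r, c in zip(bin_shares(reference, edges), bin_shares(current, edges)):
        r, c = max(r, eps), max(c, eps)  # floor empty bins to avoid log(0)
        total += (c - r) * math.log(c / r)
    return total


def drift_status(score: float) -> str:
    # Rule-of-thumb bands; tune them to your own risk appetite
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


# Hypothetical basket values (£) at training time vs this month
training = [10, 12, 15, 18, 22, 25, 30, 35, 40, 45]
this_month = [20, 25, 30, 35, 40, 45, 50, 55, 60, 65]
score = psi(training, this_month, edges=[20, 30, 40])
print(f"PSI {score:.2f}: {drift_status(score)}")
```

A drift alert does not by itself prove accuracy has fallen; it is a signal to check predictions against outcomes before retraining.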

An ideal review mechanism will involve:

- Defining measurable baselines for accuracy and decision speed tied to business KPIs

- Automating performance tracking to catch early shifts in model accuracy and surface any decline on monitoring dashboards

- Retraining regularly on new datasets, using tools such as SageMaker to refresh models

- Feeding insights back by capturing how end users respond and letting those patterns guide retraining

c) Design controlled pilots tied to ROI and adoption outcomes

An AI pilot must validate two dimensions: measurable business value and operational fit.

Then link each pilot to ROI so leaders can check whether it offers a clear path to scale.

Gauge ROI readiness by:

- Tying each pilot KPI to a financial metric such as margin improvement, lead-to-cash acceleration, or cost per transaction

- Benchmarking AI-enabled workflows against existing baselines to measure real performance gains

- Calculating sustainable returns by accounting for the full cost of running the system, including compute requirements and recurring maintenance
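These checks combine into one calculation. A minimal sketch, assuming the pilot's benefit can be expressed as a cost-per-transaction saving against the pre-AI baseline; the `PilotCosts` categories and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PilotCosts:
    """Annualised full cost of running the AI system (hypothetical categories), £."""
    compute: float
    maintenance: float
    licences: float = 0.0

    @property
    def total(self) -> float:
        return self.compute + self.maintenance + self.licences


def pilot_roi(baseline_cost_per_txn: float, ai_cost_per_txn: float,
              annual_volume: int, costs: PilotCosts) -> float:
    """ROI = (benefit vs baseline - full running cost) / full running cost."""
    if costs.total <= 0:
        raise ValueError("running costs must be positive")
    benefit = (baseline_cost_per_txn - ai_cost_per_txn) * annual_volume
    return (benefit - costs.total) / costs.total


# Illustrative: cost per support ticket falls from £4.20 to £3.10 across 200,000 tickets a year
costs = PilotCosts(compute=60_000, maintenance=45_000, licences=15_000)
roi = pilot_roi(4.20, 3.10, 200_000, costs)
print(f"Annual ROI: {roi:.0%}")
```

Keeping the baseline and the full cost line explicit makes it easy to rerun the same calculation at production scale, where compute costs often grow faster than pilot estimates suggest.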

Step 5: Production and Governance Framework for AI

The real success of AI integration depends on execution control: MIT research found that 95% of generative AI pilots fail to deliver measurable business impact. Establish clear governance before moving pilots into production so that systems remain accountable and financially traceable.

a) Production Architecture and Deployment Controls

Once AI systems move into real-world use, each model must follow a structured release cycle covering testing, validation, approval, and monitoring, with every step defined and documented.

The key focus is on:

- Deployment pipelines that automate testing and release management through CI/CD platforms such as GitLab, Azure ML, or AWS SageMaker

- Change control that maintains detailed logs of dataset versions

- Runtime monitoring that tracks model accuracy and error rates against benchmarks
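A release gate can enforce these controls before a model goes live. The sketch below is deliberately tool-agnostic and does not use the GitLab, Azure ML, or SageMaker APIs; the `Candidate` record, metric names, version labels, and tolerance are hypothetical. It approves a candidate only if it records its dataset version, meets the accuracy benchmark, does not regress against the production model, and does not raise the error rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    model_version: str
    dataset_version: str  # change control: which data the model was trained on
    accuracy: float       # measured on a held-out validation set
    error_rate: float     # e.g. failed or invalid responses in pre-release testing


def release_decision(candidate: Candidate, production: Candidate,
                     min_accuracy: float, tolerance: float = 0.005) -> tuple[bool, list[str]]:
    """Return (approved, reasons); every failed check is listed for the audit log."""
    reasons = []
    if not candidate.dataset_version:
        reasons.append("missing dataset version")
    if candidate.accuracy < min_accuracy:
        reasons.append(f"accuracy {candidate.accuracy:.3f} below benchmark {min_accuracy:.3f}")
    if candidate.accuracy < production.accuracy - tolerance:
        reasons.append("accuracy regresses against the production model")
    if candidate.error_rate > production.error_rate:
        reasons.append("error rate higher than the production model")
    return (not reasons, reasons)


prod = Candidate("v1.4", "sales-2024-12", accuracy=0.912, error_rate=0.010)
new = Candidate("v1.5", "sales-2025-03", accuracy=0.921, error_rate=0.008)
approved, reasons = release_decision(new, prod, min_accuracy=0.90)
print("approved" if approved else f"blocked: {reasons}")
```

Returning every failed check, rather than stopping at the first, gives the governance record a complete reason for each blocked release.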

b) Responsible AI and Compliance Alignment

Deployment is a continuous cycle in which every model must remain traceable: track changelogs and review the impact of each change before it goes live. Building governance into this flow keeps the system reliable as it grows.

An effective entry point is an internal AI governance board with clear accountability and these action points:

- Aligning policies with the UK’s AI Regulatory Principles of safety, transparency, fairness, accountability, and contestability

- Using international frameworks, such as the OECD AI Principles and EU AI Act guidance, for cross-border compliance

- Implementing bias-testing and interpretability tools, such as IBM AI Fairness 360 or the Google What-If Tool, to improve model transparency
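Fairness toolkits such as AI Fairness 360 report group metrics like these; the hand-rolled sketch below computes two of the simplest, statistical parity difference and disparate impact, for a binary decision such as loan approval. It does not use the AIF360 API. The group labels, sample decisions, and the 0.8 review threshold (the "four-fifths" rule of thumb) are assumptions for illustration.

```python
def selection_rate(decisions: list[int], groups: list[str], group: str) -> float:
    """Share of positive decisions (1s) within one group."""
    outcomes = [d for d, g in zip(decisions, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no records for group {group!r}")
    return sum(outcomes) / len(outcomes)


def fairness_report(decisions: list[int], groups: list[str],
                    privileged: str, unprivileged: str) -> dict[str, float]:
    p = selection_rate(decisions, groups, privileged)
    u = selection_rate(decisions, groups, unprivileged)
    return {
        "statistical_parity_difference": u - p,            # 0 means equal selection rates
        "disparate_impact": u / p if p else float("nan"),  # 1 means parity
    }


# Hypothetical approval decisions (1 = approved) for two applicant groups
decisions = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
report = fairness_report(decisions, groups, privileged="A", unprivileged="B")
print(report)
if report["disparate_impact"] < 0.8:  # hypothetical review threshold
    print("Flag for governance board review")
```

A low ratio is a prompt for investigation by the governance board, not an automatic verdict; the right metric and threshold depend on the use case and applicable regulation.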

PHASE III: Scale and Optimise the AI Strategy Framework

By now, the framework is established and deployment is underway. The next goal is to expand AI across functions and refine its outputs based on measurable feedback, building AI into the organisation’s workflows so that they learn from outcomes and create value steadily.

Step 6: Integrate and Scale What Works

Once AI pilots show measurable value, the focus shifts to embedding those outcomes in everyday operations. AI business integration is about consistency: the intent is to make AI a natural extension of existing systems.

a) Integrate AI with existing business processes and systems

AI works best when it builds on what people already do. Start by mapping where decisions intersect with tools such as CRMs, ERPs, or analytics dashboards. The objective is to make insights actionable by aligning data pipelines with governance so that models feed directly into the operational tools employees already use.

For an effective integration, treat it as a refinement that requires:

- Reviewing workflows and identifying decision points that benefit from predictive recommendations

- Connecting systems via APIs or middleware to enable real-time data exchange between AI models and operational platforms

- Standardising outputs so insights appear in the dashboards teams already rely on

b) Move successful pilots into production

The switch from pilot to production must come with the right architecture and workflow design. Each stage must establish stability and repeatability so that you can transition proven models through a defined production pipeline.

Here, the following steps can reduce deployment risk:

- Automated deployment through CI/CD frameworks such as Azure ML or Vertex AI to help maintain version control

- Continuous data validation to check data freshness and model reliability as new information flows in

- Workflow redesign, so that AI handles the structured steps and teams focus on judgement: automation moves the work forward while people make the final call
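Continuous data validation can be pictured as a gate that runs before each scoring batch. This sketch assumes batches arrive as lists of dicts with timezone-aware timestamps; the expected schema, the 24-hour freshness window, and the field names are hypothetical, and managed platforms typically provide their own data-validation features.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical schema and freshness window for a demand-forecasting feed
EXPECTED_SCHEMA = {"store_id": str, "units_sold": int, "recorded_at": datetime}
MAX_AGE = timedelta(hours=24)


def validate_batch(rows: list[dict], now: Optional[datetime] = None) -> list[str]:
    """Return a list of problems; an empty list means the batch can be scored."""
    if now is None:
        now = datetime.now(timezone.utc)
    if not rows:
        return ["empty batch"]
    problems = []
    for i, row in enumerate(rows):
        for field, expected_type in EXPECTED_SCHEMA.items():
            if not isinstance(row.get(field), expected_type):
                problems.append(f"row {i}: {field} missing or not {expected_type.__name__}")
    timestamps = [r["recorded_at"] for r in rows if isinstance(r.get("recorded_at"), datetime)]
    newest = max(timestamps, default=None)
    if newest is None or now - newest > MAX_AGE:
        problems.append("stale batch: no records within the freshness window")
    return problems


now = datetime(2025, 3, 10, 12, 0, tzinfo=timezone.utc)
batch = [
    {"store_id": "S01", "units_sold": 42, "recorded_at": now - timedelta(hours=2)},
    {"store_id": "S02", "units_sold": "n/a", "recorded_at": now - timedelta(hours=3)},
]
print(validate_batch(batch, now=now))
```

Blocking a batch, rather than silently scoring it, keeps bad inputs from reaching the dashboards and decisions downstream.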

Step 7: Measure, Iterate, and Expand Capabilities

Optimisation begins once AI is integrated into business workflows. This final step focuses on sustaining value even as data or user behaviour shifts.

Three major activities shape the success here.

a) Use performance feedback loops and business KPIs

Periodic reviews map AI performance to whichever business indicator is most sensitive at that point in the cycle. Dashboards help express those results in financial terms, giving leaders a clearer view of commercial impact.

b) Refine models, processes, and cultural adoption

AI adoption requires constant refinement because data changes and businesses evolve. Refinement therefore has a dual agenda: technical maintenance of models, and cultural reinforcement that builds habits of trust, interpretation, and data-driven decision-making within teams.

To maintain operational fit:

- Retrain models on current data, using the latest transactions and market signals

- Retire low-value models that no longer generate measurable lift

- Review workflow impact by measuring whether more accurate automation improves employee efficiency

c) Continuous ROI measurement and optimisation

Each AI project requires a routine financial review that matches outcomes against investment. ROI tracking becomes a financial exercise in which the numbers must demonstrate that AI improves efficiency and profitability over time.

Key practical checkpoints for leaders:

- Set up a quarterly review cycle to compare revenue lift against baseline forecasts

- Include cloud spend, data maintenance, retraining effort, and integration overhead in one holistic cost line so ROI reflects reality

- Correlate AI’s output with measurable shifts in margin

Conclusion

True AI maturity begins when the technology becomes part of how the business thinks about growth. Defining an AI strategy framework is only the start; it should evolve into a repeatable system.

For leaders, this means chasing more than just the next AI use case. It means creating conditions in which every use case supports the next. The challenge is no longer adopting AI, but implementing it so that it integrates with existing workflows, governance, and culture.

If your organisation is ready to move past experimentation, consult our AI implementation specialists. We’ll make sure that AI remains a continuous source of insight and improvement across your growth decisions.

Ready to turn AI strategy into measurable business value?